From RAG to Agents: The RL moment

RAG has something most AI tasks don’t: when a model retrieves a document and generates an answer, we can programmatically verify if it has retrieved the right document. This unique property transforms RAG into potentially the easiest problem to solve with reinforcement learning. While we’ve been hand-tuning retrieval strategies and obsessing over embedding models, the DeepSeek-R1 paper just showed us that with the right rewards, models can figure this out themselves.

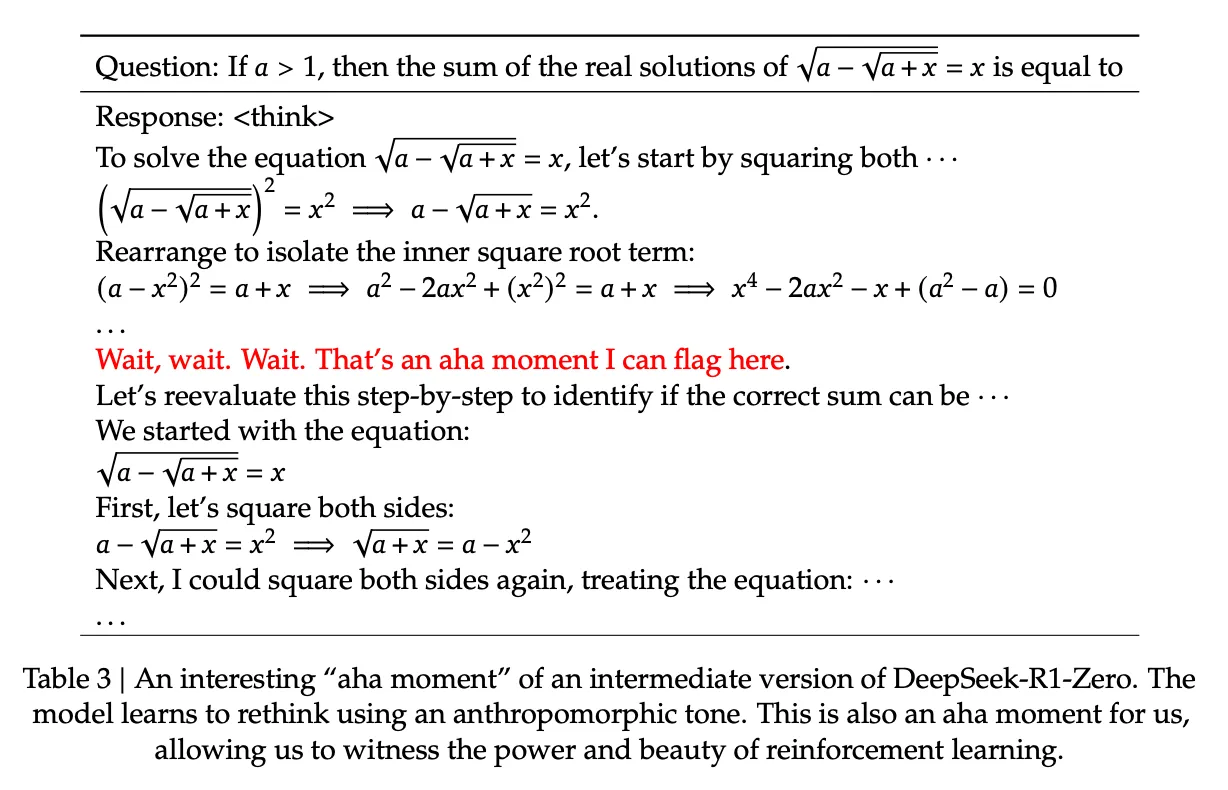

DeepSeek-R1 just proved something remarkable: simple, rule-based rewards are enough to create emergent behaviors. Process neural reward models bring unnecessary baggage: they’re vulnerable to reward hacking, demand constant retraining, and burn through compute budgets. This isn’t just convenient, it’s transformative.

This approach transforms how we think about improving RAG with RL.

Reward models for retrieval

What do rule-based rewards for RAG systems look like? The key is that every reward we design must be programmatically verifiable.

These verifiable rewards emerge naturally from different parts of the retrieval pipeline:

Relevant Document Rewards: “Did the model find the right documents that contain the answer for the query?”

Citation Rewards: “Did the answer contain citations to the documents that contain the answer?” Here, we can also give partial credit for the format, similar to how the DeepSeek-R1 paper has a format reward to encourage the model to output it’s thinking process between <think> and </think> tags.

Answer Rewards: “Did the answer contain the expected answer?” Simple, rule-based string matching that checks for the answer inside the model’s output.

Answer rewards work well for knowledge-based questions like “Who is the current US President?” but might not be applicable for complex queries that have multiple ways of expressing the same answer.

Here’s the counterintuitive insight: we don’t need perfect answer rewards. Why? Because LLMs are already exceptional at synthesis once they have the right information. And they are just going to keep getting better. The bottleneck isn’t analysis and answer generation, it’s discovery. By focusing our rewards purely on retrieval quality, we’re solving the hard problem (finding needles in proprietary haystacks) and letting the model’s inherent capabilities handle the synthesis of the final answer.

But verifiable rewards are just the beginning. The real magic happens when models start discovering their own retrieval strategies.

Learned Emergence

Most RAG systems today treat retrieval as a static pipeline: run the same searches, in the same order, for every query. But what if models could learn when and how to search, developing strategies as sophisticated as human experts?

Consider how current systems use keyword and semantic search - they typically run both for every query and combine results with a reranker. Now imagine a system that understands when each approach excels. It might open with targeted keyword searches for entity-heavy queries, then pivot to semantic search based on initial findings. Over time, it could develop hybrid strategies we haven’t even imagined, perhaps learning to identify when a query needs multi-hop reasoning and automatically constructing intermediate searches. The key here is that the system is able to learn strategies specialized for the corpus and expected queries.

These aren’t hypothetical capabilities. DeepSeek-R1 demonstrated exactly this kind of emergent behavior in mathematical reasoning. It learned to:

- Self-verify and backtrack when approaches seemed wrong

- Allocate more “thinking time” to harder problems

If models can learn problem-solving strategies in mathematics through simple rewards, imagine what they could learn about information retrieval in your specific domain. This isn’t just about making search faster or more accurate—it’s about fundamentally reimagining how AI systems interact with knowledge bases.

If models can learn problem-solving strategies in mathematics through simple rewards, imagine what they could learn about information retrieval in your specific domain. This isn’t just about making search faster or more accurate—it’s about fundamentally reimagining how AI systems interact with knowledge bases.

Search Agents

In Scaling Compute for RAG, I explored different strategies for creating RAG systems that optimize for accuracy over latency. These approaches, from query rewriting to multi-stage retrieval, all share one limitation: they’re hand-designed. But here’s what I didn’t address: how do we decide which strategies to try?

Previously, I’d literally watch human experts complete tasks and distill steps from their workflows. But this doesn’t scale. It requires high-touch access to domain experts, who often cannot even articulate their intuitive search patterns.

With RL, we might witness the birth of search agents that could:

- Discover corpus-specific patterns we never thought to look for

- Learn when to chain searches vs. when to go broad

- Develop “muscle memory” for your specific data topology

- Adapt strategies based on query complexity without explicit rules

Lawyers searching for precedents use fundamentally different mental models than marketing executives analyzing competitors. Their documents use different language, reference patterns, and organizational structures. After years of practice, they’ve internalized optimal search strategies. Why shouldn’t our systems do the same?

The Inevitable Future

RAG’s verifiability isn’t just a technical advantage; it’s a paradigm shift waiting to happen. The real revolution isn’t in the next foundation model, but rather in how we find information to feed as context to models we have today. And we’re about to let the models figure that out for themselves.

We’re moving from hand-tuned retrieval systems to self-improving search agents. Just as AlphaGo discovered Go strategies that confounded humans, these search agents will likely discover approaches we never imagined.

The blueprint is clear:

- Simple, verifiable rewards (document relevance, citations, answers)

- Let RL discover the strategies

- Watch emergence happen

The companies that figure this out first won’t just have better search. They’ll have systems that understand their data in ways their competitors can’t even conceptualize. And unlike most AI breakthroughs, this one doesn’t require massive compute or proprietary models. It just requires a little bit of courage to let go of our current methods and trust in emergence.